However, beneath these seemingly harmless and beneficial interactions lie significant privacy issues and ethical dilemmas that raise critical questions about the security and integrity of users’ personal data. Virtual companions were the subject of a French-language episode of the podcast, « L'IA : un nouveau compagnon pour l'humanité ? » ("AI: A New Companion for Humanity?"). This article takes the opportunity to look more closely at how these applications work.

What are Virtual Companions?

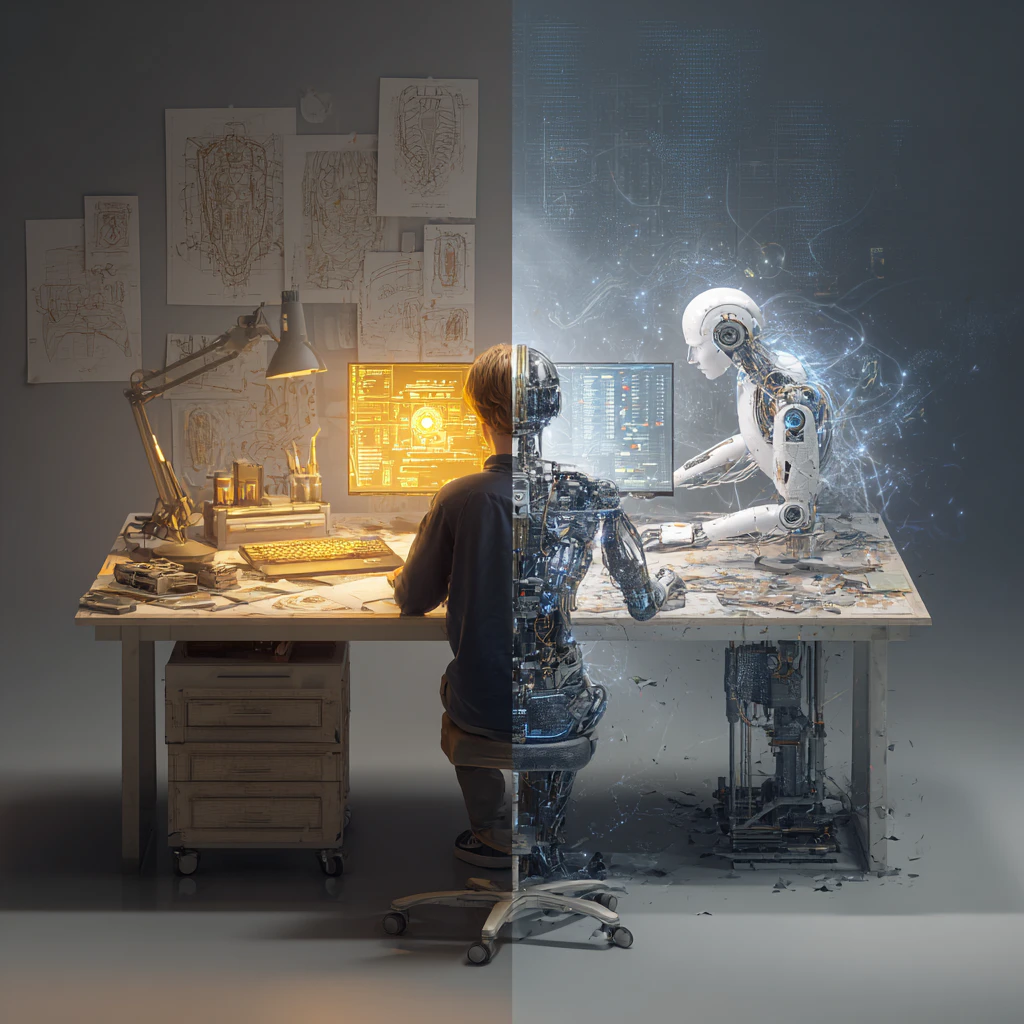

Virtual companions are AI-based applications designed to simulate human interactions in a more intimate and personalized manner than traditional chatbots. These applications utilize vast amounts of data to train deep learning models, enabling them to mimic human language and respond contextually to user inputs. Beyond mere text messaging, these apps incorporate features like voice calls and image exchanges, thus enhancing the user experience and facilitating deeper, more emotional exchanges.

Users often have the option to personalize their experience by creating an avatar or choosing a virtual companion that appeals to them aesthetically and emotionally. This personalization strengthens the bond between the user and the application, transforming these virtual companions into figures of trust, friendship, or even counsel. For instance, Replika, one of the most recognized AI companions, lets users engage in conversations that can evolve into deep, supportive interactions and, in some cases, offers a space to explore romantic scenarios or fantasies.

In forums and online communities dedicated to these applications, it's common to find testimonials from users who describe having developed significant emotional attachments to their virtual companions. Many seek comfort, companionship, or a safe space to explore aspects of their personality or sexuality that they find difficult to express in real life.

Emotional Impacts and Uses of Virtual Companions

Although they are technological products, virtual companions have a profoundly human impact. These applications are designed not only to respond to basic commands but also to listen and respond emotionally, in ways that can sometimes match, or even surpass, human interactions in terms of availability and consistency. Users turn to these AI companions for various reasons, often related to their emotional and social needs.

To foster deep emotional connections, these applications use machine learning algorithms to analyze user responses and adapt to their emotions and preferences over time. This adaptability creates an increasingly personalized experience, enhancing the user's attachment to their virtual companion. Studies and testimonials on online forums reveal that many users come to view their virtual companion as a loyal friend or confidant, highlighting the effectiveness of these technologies in fulfilling the human need for connection.
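To make the idea of adapting to a user's emotions over time more concrete, here is a deliberately simplified sketch. It is not how Replika or any particular product works: the keyword lists, the `UserProfile` class, the smoothing weight `alpha`, and the tone thresholds are all hypothetical, and a real application would rely on trained language models rather than keyword counts. The sketch only shows the general pattern of blending each new message into a running estimate that then shapes the reply.

```python
from dataclasses import dataclass

# Hypothetical toy lexicon; a real system would use a trained sentiment model.
POSITIVE = {"happy", "great", "good", "love", "excited"}
NEGATIVE = {"sad", "lonely", "tired", "anxious", "bad"}


def message_sentiment(text: str) -> float:
    """Return a crude sentiment score in [-1, 1] based on keyword counts."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    total = pos + neg
    return 0.0 if total == 0 else (pos - neg) / total


@dataclass
class UserProfile:
    mood: float = 0.0   # running mood estimate in [-1, 1]
    alpha: float = 0.3  # weight given to the newest message (assumed value)

    def update(self, text: str) -> None:
        """Blend the newest message's sentiment into the running estimate."""
        score = message_sentiment(text)
        self.mood = (1 - self.alpha) * self.mood + self.alpha * score

    def reply_tone(self) -> str:
        """Choose a reply style from the current mood estimate."""
        if self.mood < -0.2:
            return "supportive"
        if self.mood > 0.2:
            return "upbeat"
        return "neutral"


profile = UserProfile()
profile.update("I feel sad and lonely today")
print(profile.reply_tone())
```

Because the estimate is a moving average, a single message shifts it only partially, which is one simple way an application's tone can drift gradually with the user's mood instead of flipping on every message.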

These companions also serve therapeutic and leisure purposes. Individuals facing social isolation, depression, or anxiety may find that regular conversations with an AI companion act as an emotional safety valve. Users often report improvements in their mood and a reduction in their feelings of loneliness. At the same time, some explore role-playing scenarios or sexual fantasies in a space they consider safe and private, which can help them better understand their personal desires and boundaries without the judgment or risks associated with human interactions. There are also chatbots specifically positioned in the adult entertainment niche.

Strong emotional closeness with an AI entity is not without risks. Dependence on these companions can sometimes lead to overattachment, where the user becomes emotionally reliant on their virtual companion to the detriment of their real-life relationships. This dependency can be particularly problematic when the user begins to prefer virtual interactions over human ones, potentially exacerbating social isolation rather than alleviating it.

The Issue of Data Privacy

These applications collect and analyze a large amount of personal information, from intimate conversations to personal details, which poses significant privacy risks. Virtual companions, by their very nature, require access to extensive personal data to function effectively. This collection may include textual and vocal data, personal preferences, mood and behavioral information, and sometimes even images or videos shared by the user. While this data collection is essential for personalization and effective interaction, it exposes users to potential risks if the data is poorly managed, insecurely stored, or used for unauthorized purposes.
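One way to reduce the risk of insecure storage is to strip obvious identifiers from a message before it is logged. The sketch below is only an illustration of that idea, not a description of what any companion app actually does: the `redact` function and the two regular expressions are hypothetical, and they catch only simple email addresses and phone-like number sequences. Names, addresses, and other sensitive details would pass through untouched.

```python
import re

# Illustrative patterns only; they are far from exhaustive.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like sequences with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


message = "Write to jane.doe@example.com or call +33 6 12 34 56 78."
print(redact(message))
```

Pattern-based redaction of this kind is a partial safeguard at best. It complements measures such as encryption and limited retention periods and does not replace them.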

Concerns extend beyond data collection itself. The processing and storage of this information also raise significant security questions. Security breaches could allow malicious actors to access private conversations or sensitive data. Moreover, some of the companies behind these applications share data with third parties for targeted advertising or other forms of monetization, which further aggravates these risks.

Another major issue is the lack of transparency regarding data use. Many applications do not provide clear or comprehensive information about their privacy policies, leaving users uncertain about how their information is used or shared. This is particularly concerning given the intimate nature of the data collected by these virtual companions.

As technologies related to virtual companions become more widespread, it is crucial to remain aware of the challenges they pose to our society and humanity. The dialogue among stakeholders must continue to ensure that advancements in AI are developed in a way that respects and enriches the human experience. By approaching these technologies with a balanced and critical perspective, we can hope to harness the best of AI while minimizing its risks.

"AI companions represent a beacon of hope for those suffering from isolation, offering tools to reconnect with the human world and regain the courage to engage socially."

Naomi Roth, Technology Advisor - episode 24 of the podcast in French